Piksor V2 Content Moderation

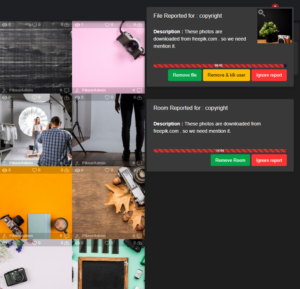

In Piksor v2, we’ve developed a new reporting system to make moderation more effective and fair for both room owners and community members. When a user reports a file, the room owner immediately receives a notification showing the report together with the reported file. The owner then has 10 minutes to either remove the file or ignore the report. The notification cannot be closed without choosing one of these actions, so the owner must make a decision.

If the owner chooses to remove the file, the system deletes it automatically. If the owner ignores the report, it is escalated to the moderation team for further review. The same process applies to room reports, except that room owners have 15 minutes to act. If the room owner leaves the room without addressing a report, the system automatically removes the file or the room once the 10-minute (file) or 15-minute (room) window expires.

In every case, the report is logged in our database so we can monitor how members and room owners respond and detect abuse of the reporting system.
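To make the report lifecycle concrete, here is a minimal sketch of the decision logic described above, written as a small state machine. It is not Piksor's code: the names `Report`, `OwnerAction`, `resolve` and the outcome strings are hypothetical. The only details taken from this post are the 10-minute window for file reports, the 15-minute window for room reports, the remove/ignore choice, escalation on ignore, automatic removal when the window expires, and logging of every report. As an assumption, any expired window without a decision is treated like the "owner left the room" case.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import List, Optional, Tuple

# Response windows stated in the post: 10 minutes for file reports,
# 15 minutes for room reports.
WINDOWS = {"file": timedelta(minutes=10), "room": timedelta(minutes=15)}


class OwnerAction(Enum):
    REMOVE = "remove"
    IGNORE = "ignore"


@dataclass
class Report:
    """Hypothetical report record; field names are illustrative only."""
    target_kind: str                       # "file" or "room"
    created_at: datetime
    action: Optional[OwnerAction] = None   # owner's choice, if any
    acted_at: Optional[datetime] = None    # when the owner chose


def resolve(report: Report, now: datetime, log: List[Tuple[str, str]]) -> Optional[str]:
    """Return the report's outcome, or None while the owner can still act."""
    deadline = report.created_at + WINDOWS[report.target_kind]
    acted_in_time = (report.action is not None and report.acted_at is not None
                     and report.acted_at <= deadline)
    if acted_in_time:
        # Remove -> content deleted; ignore -> handed to the moderation team.
        outcome = "removed" if report.action is OwnerAction.REMOVE else "escalated"
    elif now >= deadline:
        # Assumption: any expired window without a decision (e.g. the owner
        # left the room) is treated as automatic removal.
        outcome = "auto_removed"
    else:
        return None  # window still open, nothing to log yet
    # Every resolved report is logged so response patterns can be reviewed.
    log.append((report.target_kind, outcome))
    return outcome


if __name__ == "__main__":
    events: List[Tuple[str, str]] = []
    t0 = datetime(2024, 9, 13, 12, 0)
    ignored = Report("file", t0, OwnerAction.IGNORE, t0 + timedelta(minutes=3))
    print(resolve(ignored, t0 + timedelta(minutes=3), events))   # escalated
    pending = Report("room", t0)
    print(resolve(pending, t0 + timedelta(minutes=12), events))  # None (15-minute window)
    print(resolve(pending, t0 + timedelta(minutes=15), events))  # auto_removed
```

Keeping the deadline check in one function means the file and room flows differ only in their window length, which mirrors how the post describes the two report types.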

But what happens if the room owner is the abuser and ignores all reports?

In cases where a room owner abuses their power or ignores all reports, we have safeguards in place. The system logs all actions, along with the owner’s account records, IP addresses, and email addresses, which allows us to track misuse. If an owner consistently ignores reports and allows violations to continue, we can take serious action, up to and including a hard ban. A hard ban permanently removes the user from the platform and prevents them from returning; we have already used this measure successfully against multiple accounts.

Why is the Moderation System Designed This Way?

We implemented this system to empower all members of the community by giving them a role in moderation. Instead of relying on a small team of 10 moderators for the entire platform, Piksor v2 allows every room member to take part in ensuring the community remains safe and respectful. This decentralized approach helps us maintain a higher level of moderation across all rooms and provides faster responses to problematic content.

What does the Piksor team do as a service provider? Do you rely solely on the community for moderation?

As a service provider, the Piksor team is fully committed to maintaining a safe and respectful environment for all users. While we rely on community members to help report violations, this is only a part of our overall moderation strategy.

Our moderation system, as described, is designed to assist our moderation team. Currently, all of our moderators are based in Europe, and since Piksor operates globally, the team cannot provide round-the-clock coverage. Piksor v2’s moderation system helps fill this gap, particularly when our team is offline (for example, late at night in Europe) or at peak times when the number of rooms exceeds what our small team can handle.

To further ensure compliance with our platform’s rules, we also use “🤖Piksorbot”, an automated moderation bot. Piksorbot regularly joins rooms, taking random samples of content to check for violations of our terms of service. This combination of community-based reporting, automated monitoring, and a dedicated moderation team allows us to keep the platform safe and secure for all users.
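As an illustration of the random-sampling idea, the sketch below runs one hypothetical patrol round: it visits a random subset of rooms and checks a random sample of files in each. The post does not say how Piksorbot picks rooms or files, how many it samples, or what checks it runs, so the function name `patrol`, the two sample sizes, and the `check` callback are all assumptions.

```python
import random
from typing import Callable, Dict, List


def patrol(rooms: Dict[str, List[str]], rooms_per_round: int, files_per_room: int,
           check: Callable[[str], bool], rng: random.Random) -> Dict[str, List[str]]:
    """One hypothetical patrol round.

    Visits a uniform random subset of rooms, checks a uniform random sample of
    files in each visited room, and returns the flagged files per room.
    """
    # sorted() gives a stable list of room IDs so a seeded rng is reproducible.
    visited = rng.sample(sorted(rooms), min(rooms_per_round, len(rooms)))
    flagged: Dict[str, List[str]] = {}
    for room in visited:
        files = rooms[room]
        sample = rng.sample(files, min(files_per_room, len(files)))
        # `check` stands in for whatever content check the bot actually applies.
        hits = [f for f in sample if check(f)]
        if hits:
            flagged[room] = hits
    return flagged
```

Sampling instead of scanning everything keeps each round cheap while still giving every room and file a chance of being inspected over many rounds.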

Why don’t you recruit more moderators from different time zones?

Recruiting more moderators from other time zones is definitely part of our plan. In fact, we’ve already developed the necessary tools to make this transition smoother. With just a click, we can change a user’s role from member to moderator. However, instead of rushing to recruit moderators, we are currently monitoring user behavior to find community members who consistently report issues in a helpful and fair way.

Once we identify reliable reporters, we extend an invitation to join our moderation team. This ensures that we’re bringing in moderators who genuinely care about the community and can handle the responsibility.
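One simple way to express "consistently reports issues in a helpful and fair way" is the share of a member's reports that were upheld. The sketch below ranks candidates by that ratio and requires a minimum number of reports. The function name, the default thresholds (20 reports, 90% upheld), and the meaning of the `upheld` flag are illustrative assumptions, not Piksor's actual criteria.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def reliable_reporters(reports: Iterable[Tuple[str, bool]], min_reports: int = 20,
                       min_upheld_rate: float = 0.9) -> List[str]:
    """Rank members whose reports were mostly upheld.

    `reports` holds (reporter_id, upheld) pairs, where `upheld` means the report
    led to removal or was confirmed by a moderator (an assumed definition).
    """
    totals: Counter = Counter()
    upheld: Counter = Counter()
    for reporter, was_upheld in reports:
        totals[reporter] += 1
        if was_upheld:
            upheld[reporter] += 1
    # Require a minimum history so a handful of lucky reports does not qualify anyone.
    candidates = [r for r, n in totals.items()
                  if n >= min_reports and upheld[r] / n >= min_upheld_rate]
    # Highest upheld rate first; ties broken by the number of reports.
    return sorted(candidates, key=lambda r: (-upheld[r] / totals[r], -totals[r]))
```

Read from the other end, a persistently low upheld rate is one possible signal for the kind of false reporting discussed next.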

Over the past eight months, our AI moderation system flagged more than 300 users as false reporters: individuals who abused the system by submitting misleading or irrelevant reports. In one extreme case, these false reports led our AI to incorrectly flag an image of an elderly woman as depicting a child. Because of these false reports, we decided to disconnect the AI moderation system and introduce the Piksor v2 reporting system, which allows for more accurate human review with assistance from our automated processes.

This approach ensures that our moderators are trustworthy and aligned with our platform’s values, and it helps us avoid unnecessary moderation errors caused by false reporting.

What about transparency? Can you show us what happened to our reports and the actions Piksor took?

Yes, transparency is important to us, and we believe our users should know the outcomes of their reports. That’s why Piksor offers a real-time report tracking feature. You can view every report you’ve submitted, along with the room slug and the actions taken by our moderation team or the room owner, including whether the file was removed, whether the report was escalated, or whether the room owner ignored it.

Additionally, you can see how many users have been blocked from the platform due to violations. We aim to keep our moderation process as transparent as possible, giving our community insight into how we handle reports and maintain a safe environment.

By providing this live information, you can have confidence that your reports are being reviewed and appropriate action is taken to ensure compliance with our community guidelines.

Published September 13, 2024.